The Portfolio Scalability Problem Facing Every Accelerator

"We can only review 24% of our projects."

This confession from an ecosystem manager isn't unique.

Across the blockchain industry, accelerators, venture funds, and ecosystem programs face the same ceiling: they can source deals faster than they can properly evaluate them.

The numbers tell the story:

- 87 active projects in the portfolio

- Only 21 receiving regular tokenomics review

- 66 projects operating as complete blind spots

The reason isn't incompetence or lack of effort. It's mathematics.

Manual tokenomics analysis requires 3-4 hours per project. For an 87-project portfolio, that's up to 348 hours monthly, or nearly nine 40-hour work weeks of specialist time every month. And that's just for initial review, before considering ongoing monitoring, post-launch tracking, or emergency response when something breaks.
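The arithmetic behind this ceiling is easy to check. The sketch below is illustrative only: the function names and the 40-hour work week are assumptions, and it uses the upper end of the 3-4 hour range.

```python
# Assumption: a standard work week is 40 hours.
HOURS_PER_WORK_WEEK = 40


def monthly_review_hours(projects: int, hours_per_project: float) -> float:
    """Total analyst hours needed to review every project once a month."""
    return projects * hours_per_project


def coverage(reviewed: int, total: int) -> float:
    """Share of the portfolio that receives regular review."""
    return reviewed / total


hours = monthly_review_hours(87, 4)           # upper end of the 3-4 hour range
print(hours)                                  # 348
print(round(hours / HOURS_PER_WORK_WEEK, 1))  # 8.7 work weeks per month
print(round(coverage(21, 87) * 100))          # 24 (percent of projects reviewed)
```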

Most ecosystem managers hit this wall between 20 and 30 projects.

Beyond that point, scaling requires either:

- Hiring more specialized analysts (expensive, slow, inconsistent)

- Accepting blind spots in the portfolio (risky, unsustainable)

- Implementing systematic automation (the solution this article explores)

Why Hiring More Analysts Doesn't Solve the Problem

The Tokenomics Specialist Shortage

Quality tokenomics expertise is rare. These professionals need to understand:

- Economic game theory and incentive design

- Smart contract mechanics and blockchain infrastructure

- Financial modeling and stress testing

- Regulatory considerations across jurisdictions

- Community dynamics and behavioral economics

Finding candidates with this multi-disciplinary expertise takes months. Onboarding them takes longer.

The Consistency Problem

Even when you successfully hire specialists, each analyst brings different frameworks, different risk tolerances, and different blind spots.

Project A reviewed by Analyst 1 gets a different level of scrutiny than Project B reviewed by Analyst 2.

This inconsistency creates two problems:

- Quality variance: Some projects slip through with critical flaws

- Resource inefficiency: Different analysts spend vastly different amounts of time on similar projects

The Economics Don't Work

Senior tokenomics specialists command $150,000+ annually. For a 50-project portfolio requiring continuous monitoring:

- 5 specialists at $150K = $750,000/year in salary alone

- Add benefits, equipment, management overhead: ~$1,000,000 annual cost

- Result: Still only covers initial reviews, not ongoing monitoring

The math simply doesn't support scaling through headcount.

Auto-Import: From Spreadsheets to Systematic Analysis

Week 1: Instant Portfolio Mapping

Rather than hiring, one ecosystem manager implemented Tokenise's automated platform.

The first action: auto-importing all 87 existing token models.

The process took less than a day:

1. Export existing Excel/Google Sheets token models

2. Drag and drop into the platform

3. System automatically maps:

- Token allocations (team, investors, treasury, community)

- Vesting schedules with cliff periods

- Emission curves and inflation rates

- Fundraising rounds and valuations

- Liquidity provisions

Result: Every allocation, vesting schedule, and emission curve instantly mapped. No manual data entry. No transcription errors. Same standardized format for every project.
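To make "same standardized format" concrete, here is a minimal sketch of what a normalized token model record could look like. The article does not describe Tokenise's actual schema, so every class name, field name, and the example project are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Allocation:
    category: str            # e.g. "team", "investors", "treasury", "community"
    share_pct: float         # percentage of total supply
    cliff_months: int = 0    # months before any tokens unlock
    vesting_months: int = 0  # vesting duration after the cliff


@dataclass
class TokenModel:
    project: str
    total_supply: int
    allocations: list[Allocation] = field(default_factory=list)

    def validation_issues(self) -> list[str]:
        """Return human-readable problems a reviewer should look at."""
        issues = []
        total = sum(a.share_pct for a in self.allocations)
        if abs(total - 100.0) > 0.01:
            issues.append(f"allocations sum to {total:.2f}%, expected 100%")
        for a in self.allocations:
            if a.share_pct < 0:
                issues.append(f"{a.category}: negative share")
        return issues


model = TokenModel(
    project="ExampleProject",  # made-up project for illustration
    total_supply=1_000_000_000,
    allocations=[
        Allocation("team", 20, cliff_months=12, vesting_months=36),
        Allocation("investors", 25, cliff_months=6, vesting_months=24),
        Allocation("treasury", 30),
        Allocation("community", 20),
    ],
)
print(model.validation_issues())  # ['allocations sum to 95.00%, expected 100%']
```

Once every project shares one shape like this, the same checks can run across the whole portfolio without per-spreadsheet special cases.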

Time investment: 90 seconds per project vs. 4 hours of manual modeling.

Week 2: Automated Red Flag Detection Reveals Hidden Risks

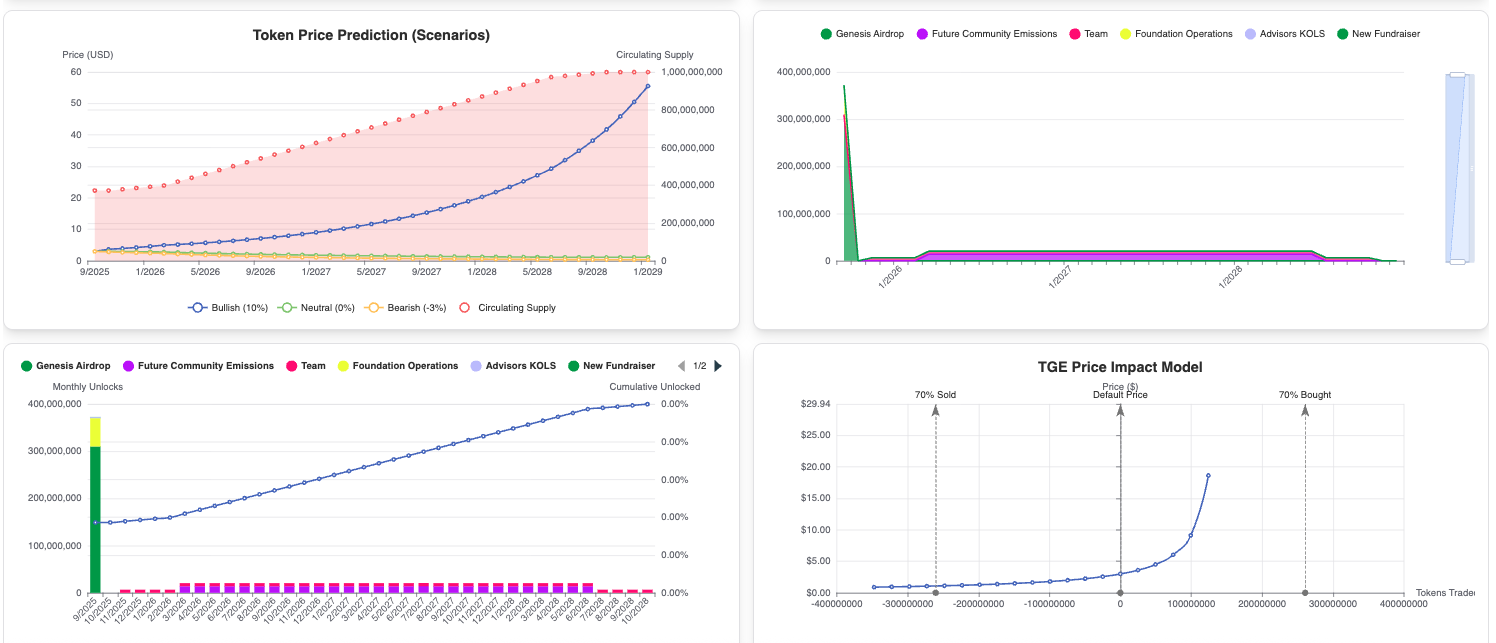

With all 87 projects mapped, the system ran automated health checks across seven critical metrics:

- Liquidity Depth: Liquidity pool size as % of market cap

- Token Velocity: How quickly tokens change hands

- Holder Concentration: Distribution across wallet addresses

- Emission-to-Revenue Ratio: Sustainability indicator

- Governance Participation: Community engagement levels

- Team Token Movement: Insider selling signals

- Price-Dependent Mechanisms: Death spiral vulnerabilities
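Three of these metrics reduce to simple ratios. The sketch below shows one way to compute them; the liquidity and concentration definitions follow the article, while the velocity formula (transfer volume divided by average circulating supply) is an assumed, commonly used definition, and all input numbers are made up.

```python
from __future__ import annotations


def liquidity_depth_pct(pool_value_usd: float, market_cap_usd: float) -> float:
    """Liquidity pool size as a percentage of market cap."""
    return 100.0 * pool_value_usd / market_cap_usd


def token_velocity(transfer_volume: float, avg_circulating_supply: float) -> float:
    """Assumed definition: tokens transferred in a period / average circulating supply."""
    return transfer_volume / avg_circulating_supply


def top_n_concentration_pct(balances: list[float], n: int = 10) -> float:
    """Share of supply held by the n largest wallets, as a percentage."""
    total = sum(balances)
    top = sum(sorted(balances, reverse=True)[:n])
    return 100.0 * top / total


# Made-up numbers for illustration only.
print(liquidity_depth_pct(250_000, 10_000_000))  # 2.5
print(token_velocity(6_400_000, 2_000_000))      # 3.2
balances = [50.0] * 10 + [5.0] * 100             # 10 large wallets, 100 small ones
print(top_n_concentration_pct(balances))         # 50.0
```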

The system flagged 12 projects with critical issues that had never been reviewed:

- 3 projects: Liquidity depth <3% of market cap (death spiral risk)

- 4 projects: Token velocity >20 on 30-day average (farm-and-dump pattern)

- 5 projects: Top 10 wallets controlling >45% of supply (concentration risk)

These 12 projects weren't in the manager's "priority 21."

Under manual review, they would never have been caught until problems became public.
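A screening pass like this can be sketched in a few lines. The three thresholds come from the flagged cases above; the `Metrics` class, the function name, and the sample portfolio are hypothetical, and the platform's real rule set is not documented here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Metrics:
    liquidity_depth_pct: float  # liquidity pool size as % of market cap
    velocity_30d: float         # 30-day average token velocity
    top10_share_pct: float      # % of supply held by the 10 largest wallets


def red_flags(m: Metrics) -> list[str]:
    """Apply the three thresholds quoted in the article."""
    flags = []
    if m.liquidity_depth_pct < 3:
        flags.append("liquidity depth below 3% of market cap")
    if m.velocity_30d > 20:
        flags.append("30-day token velocity above 20")
    if m.top10_share_pct > 45:
        flags.append("top 10 wallets hold more than 45% of supply")
    return flags


portfolio = {  # hypothetical projects with made-up metrics
    "alpha": Metrics(2.1, 4.0, 30.0),
    "beta": Metrics(8.0, 26.5, 51.0),
    "gamma": Metrics(12.0, 3.2, 22.0),
}
flagged = {name: red_flags(m) for name, m in portfolio.items() if red_flags(m)}
for name, flags in flagged.items():
    print(name, flags)
```

Because the same function runs on every project, a project outside the "priority 21" gets exactly the same scrutiny as one inside it.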

Week 8: Early Warning System Prevents Disaster

One project that had passed initial review began showing concerning signals:

- Day 1-5: Token velocity increased from baseline 3.2 to 8.4

- Day 6-11: Velocity accelerated to 28.7 (roughly a 797% increase over the 3.2 baseline)

- Day 11: Automated alert triggered to ecosystem manager

The pattern was clear: classic farm-and-dump behavior. Users were claiming rewards and immediately selling, creating downward price pressure.

Manual review timeline: This would have been discovered 2-3 weeks later during the next scheduled review—after the damage was public and reputational harm done.

Automated timeline: Alert within 11 days, intervention before public awareness, farm-and-dump scenario prevented through adjusted reward emissions.
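The alert logic can be approximated with a simple threshold check. This is a sketch under stated assumptions: the article does not say which rule fired, so the code reuses the ">20" velocity red flag as the trigger, treats the baseline as "day 0", and uses only the three readings quoted in the timeline.

```python
from __future__ import annotations

# Readings quoted in the timeline; labelling the baseline "day 0" is an assumption.
readings = {0: 3.2, 5: 8.4, 11: 28.7}

ALERT_THRESHOLD = 20.0  # assumed trigger, borrowed from the velocity red flag


def multiple_of_baseline(baseline: float, current: float) -> float:
    """How many times larger the current reading is than the baseline."""
    return current / baseline


def first_alert_day(series: dict[int, float], threshold: float = ALERT_THRESHOLD) -> int | None:
    """Return the earliest day whose velocity exceeds the threshold, if any."""
    for day in sorted(series):
        if series[day] > threshold:
            return day
    return None


print(round(multiple_of_baseline(3.2, 8.4), 1))   # 2.6
print(round(multiple_of_baseline(3.2, 28.7), 1))  # 9.0
print(first_alert_day(readings))                  # 11
```

A production system would likely also watch the rate of change, so that a jump from 3.2 to 8.4 raises a softer warning before the hard threshold is crossed.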

How Tokenise Enables 200-Project Portfolio Management

1. Auto-Import Token Models (90 Seconds, Not 4 Hours)

The Problem: Junior analysts spend 3-4 hours manually building token models from whitepapers, converting allocation tables, and mapping vesting schedules in spreadsheets.

The Solution: Drag existing Excel or Google Sheets files into the platform. System automatically:

- Maps all token allocations across stakeholder categories

- Identifies vesting schedules, cliff periods, and unlock events

- Charts emission curves and calculates inflation rates

- Flags any incomplete or inconsistent data for review

Time Savings: more than 99% reduction in model-building time (90 seconds vs. 4 hours)
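For readers who want to see what "mapping a vesting schedule" and "calculating inflation" involve, here is a minimal sketch. It assumes a simple schedule (nothing unlocks during the cliff, then linear unlocking) and a simple inflation definition (supply growth over a year); real token models vary, and the function names and figures are hypothetical.

```python
def unlocked_fraction(month: int, cliff_months: int, vesting_months: int) -> float:
    """Fraction of an allocation unlocked at a given month.

    Assumed schedule: nothing unlocks before the cliff ends, then tokens
    unlock linearly over vesting_months.
    """
    if month < cliff_months:
        return 0.0
    if vesting_months == 0:
        return 1.0
    return min(1.0, (month - cliff_months) / vesting_months)


def annual_inflation_pct(supply_start: float, supply_end: float) -> float:
    """Growth in circulating supply over one year, as a percentage."""
    return 100.0 * (supply_end - supply_start) / supply_start


team_tokens = 200_000_000  # made-up allocation: 12-month cliff, 36-month vesting
for month in (6, 30, 60):
    print(month, team_tokens * unlocked_fraction(month, 12, 36))
# 6 0.0
# 30 100000000.0
# 60 200000000.0

print(annual_inflation_pct(400_000_000, 460_000_000))  # 15.0
```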

2. Automated Red Flag Detection (7 Critical Metrics Monitored Continuously)

The Problem: Manual reviews depend on analyst memory, experience, and attention to detail. Critical metrics get overlooked. Standards vary between analysts.

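The Solution: Every imported project is automatically screened against critical thresholds, such as liquidity depth below 3% of market cap, 30-day token velocity above 20, and the top 10 wallets controlling more than 45% of supply.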

Consistency Benefit: Every project evaluated against identical criteria, every time.

3. Portfolio-Wide Dashboard (See 200 Projects at Once)

The Problem: Ecosystem managers juggle 87 different spreadsheets, hoping they're updated. No unified view of portfolio health. Blind to comparative metrics.

The Solution: Single dashboard displaying:

- Color-coded health scores (Green/Yellow/Red) for every project

- Sortable by risk level, development stage, or ecosystem category

- Filterable by custom tags or project characteristics

- Comparative metrics across the entire portfolio

Instantly identify which projects need attention, which are performing well, and where resources should be allocated.
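The dashboard operations described here (color coding, sorting, filtering) are straightforward once each project has a comparable score. The article does not explain how health scores are calculated, so the rule below (zero flags is Green, one is Yellow, two or more is Red) is purely a placeholder, as are the sample projects.

```python
from collections import Counter


def health_color(flag_count: int) -> str:
    """Hypothetical scoring rule; the platform's real methodology is not documented here."""
    if flag_count == 0:
        return "Green"
    if flag_count == 1:
        return "Yellow"
    return "Red"


projects = [  # made-up portfolio entries
    {"name": "alpha", "stage": "post-launch", "flags": 1},
    {"name": "beta", "stage": "pre-launch", "flags": 3},
    {"name": "gamma", "stage": "post-launch", "flags": 0},
]
for p in projects:
    p["health"] = health_color(p["flags"])

# Sort by risk (most flags first) and filter by development stage.
by_risk = sorted(projects, key=lambda p: p["flags"], reverse=True)
post_launch = [p["name"] for p in projects if p["stage"] == "post-launch"]

print([p["name"] for p in by_risk])            # ['beta', 'alpha', 'gamma']
print(post_launch)                             # ['alpha', 'gamma']
print(Counter(p["health"] for p in projects))  # one project per color
```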

4. Standardized SOPs (Train Junior Team Members in 1 Week)

The Problem: Tokenomics expertise exists as tribal knowledge. Senior analysts take 6-12 months to onboard. Each develops their own frameworks. Quality varies wildly.

The Solution: Standardized review protocols embedded in platform:

- Same red flag criteria for every project

- Same health score methodology across portfolio

- Same reporting format and documentation requirements

- Automated checklists guide junior reviewers

Scalability Impact: Junior team members are productive in 1 week, not 6 months. Consistent quality across 200 projects with a 3-person team.

Scalable Math

Manual Approach (20 Projects - Maximum Sustainable)

Time Requirements:

- 4 hours per project review

- 20 projects × 4 hours = 80 hours/month

- Ongoing monitoring: +40 hours/month

- Total: 120 hours/month

Team Requirements:

- 2 full-time tokenomics specialists

- Annual cost: ~$300,000 (salaries + benefits)

Scalability Ceiling: Cannot efficiently scale beyond ~30 projects without additional hires

Tokenise Approach (200 Projects - Proven)

Time Requirements:

- 15 minutes per project review (initial import)

- 200 projects × 15 min = 50 hours/month

- Automated monitoring: 10 hours/month (alert response only)

- Total: 60 hours/month

Team Requirements:

- 1 portfolio manager + automated system

- Annual cost: ~$150,000 (salary)

Scalability Ceiling: 500+ projects with same team size (scales with technology, not headcount)
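The two workload estimates above can be reproduced with one small function. The function name is hypothetical; the inputs are the figures stated in the two breakdowns.

```python
def monthly_hours(projects: int, minutes_per_review: float, monitoring_hours: float) -> float:
    """Review time for every project plus a fixed monthly monitoring budget."""
    return projects * minutes_per_review / 60 + monitoring_hours


manual = monthly_hours(20, 240, 40)     # 4 hours per review, 40 hours monitoring
automated = monthly_hours(200, 15, 10)  # 15 minutes per review, 10 hours alert response

print(manual)           # 120.0
print(automated)        # 60.0
print(manual / 20)      # 6.0 hours per project per month
print(automated / 200)  # 0.3 hours per project per month
```

The per-project figure is the one that matters for scaling: on these numbers it drops from 6 hours to about 18 minutes a month.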

ROI Calculation

For a 100-project portfolio:

Manual Approach Cost:

- 5 specialists required

- Annual cost: $750,000

- Coverage: ~60% of portfolio (capacity constraints)

- Emergency rate: 8.2 incidents per quarter

Tokenise Approach Cost:

- 2 portfolio managers + platform

- Annual cost: $310,000

- Coverage: 100% of portfolio

- Emergency rate: <1 incident per quarter

Annual Savings: $440,000 (59% cost reduction)

Coverage Improvement: 40 additional projects monitored

Risk Reduction: 73% fewer emergencies
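The savings and coverage figures follow directly from the inputs listed above. A short sketch of the calculation, with variable names chosen for illustration and cost per covered project added as a derived figure:

```python
PORTFOLIO_SIZE = 100

manual_cost, manual_coverage_pct = 750_000, 60
tokenise_cost, tokenise_coverage_pct = 310_000, 100

savings = manual_cost - tokenise_cost
savings_pct = 100 * savings / manual_cost
extra_projects = PORTFOLIO_SIZE * (tokenise_coverage_pct - manual_coverage_pct) // 100

# Derived figure: annual cost per project that actually gets covered.
manual_per_project = manual_cost / (PORTFOLIO_SIZE * manual_coverage_pct / 100)
tokenise_per_project = tokenise_cost / (PORTFOLIO_SIZE * tokenise_coverage_pct / 100)

print(savings)               # 440000
print(round(savings_pct))    # 59
print(extra_projects)        # 40
print(manual_per_project)    # 12500.0
print(tokenise_per_project)  # 3100.0
```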

Your Ecosystem Doesn't Need More Analysts

The limiting factor for ecosystem growth isn't deal flow.

Builders will keep flooding into the industry, which is great.

But for many of them, game theory and tokenomics sit outside their wheelhouse. It's simply not feasible to master both with so many other plates spinning: tech, KOLs, market making, and so on.

Most ecosystem managers hit a ceiling around 20-30 active projects because manual analysis doesn't scale with headcount in a cost-effective way.

The solution isn't hiring more specialists. It's implementing systematic automation that:

- Auto-imports token models in 90 seconds

- Detects critical red flags automatically across key metrics

- Monitors portfolio health in real-time, post-launch

- Provides unified visibility across 200+ projects

- Standardizes review processes for consistent quality

One ecosystem manager went from 24% portfolio coverage to 100% in 90 days. Same team size. 73% fewer emergencies. Zero additional headcount.

Your job isn't data entry. It's building unicorns.

Frequently Asked Questions (FAQs)

How long does it take to import existing token models?

Approximately 90 seconds per project. For a portfolio of 87 projects, that is a little over two hours of import time, and the complete import and initial analysis, including QA review of flagged items, can be finished in less than a day.

What file formats does Tokenise support for auto-import?

Tokenise supports Excel (.xlsx, .xls) and Google Sheets. Most tokenomics models built in standard spreadsheet formats can be imported directly without reformatting.

How accurate is the automated red flag detection?

Based on analysis of 250+ token failures, our seven-metric framework detected warning signs in 83% of cases at least 30 days before public collapse. False positive rate: approximately 12%.

What happens if a project doesn't have an existing token model?

The platform includes a visual model builder for creating token models from scratch. This takes approximately 30-45 minutes vs. 3-4 hours manually, as the interface guides you through required inputs and validates completeness.

How much training does my team need to use the platform effectively?

Junior team members can complete basic reviews within 1 week of training. Full platform proficiency (including advanced scenario modeling and custom alerts) is typically achieved in 2-3 weeks.

Can I export data and reports from the platform?

Yes. All health scores, red flag analyses, and portfolio metrics can be exported to PDF or CSV formats for sharing with leadership, LPs, or ecosystem partners.

What if I need custom metrics or red flag criteria specific to my ecosystem?

The platform supports custom threshold configuration and can add ecosystem-specific metrics. Custom development requests are evaluated during the pilot phase to ensure alignment before commitment.

How does this compare to hiring a tokenomics consulting firm?

Consulting firms typically charge $15-25K per project for one-time analysis. Tokenise provides ongoing monitoring for 50 projects at $5-7K per quarter. That is $20-28K per year, roughly the cost of a traditional one-time analysis of 1-2 projects.

Is the platform suitable for smaller accelerators with 10-15 projects?

Yes. While the scalability benefits are most pronounced at 30+ projects, smaller accelerators benefit from consistent quality, time savings, and post-launch monitoring even with smaller portfolios.